Class Visualization Part 3 - Model Choice

Read Part 1: Class Visualization with Deep Learning to learn how we can get a convolutional neural network to visualize a class.

Read Part 2: Starting Image to learn how the base image affects class visualization.

In this post in the class visualization series, we will look at a few different pre-trained models and see how they change the properties of class visualization.

In the previous posts, we used Squeezenet from torchvision. For full transparency, it is created like

import torchvision

model = torchvision.models.squeezenet1_1(pretrained=True)

and creates visualizations like

![image]

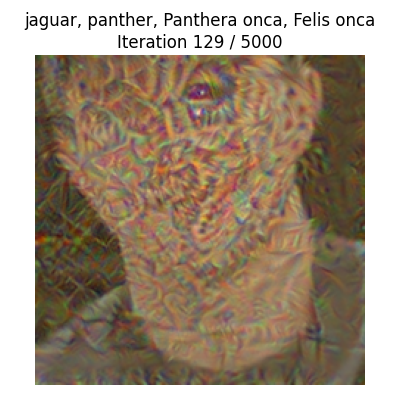

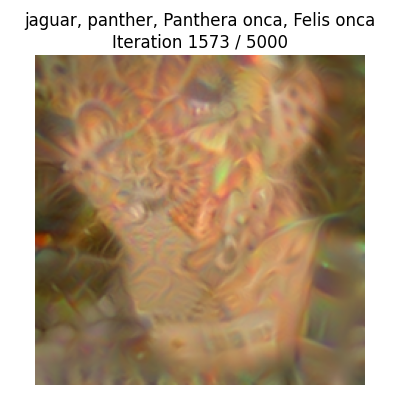

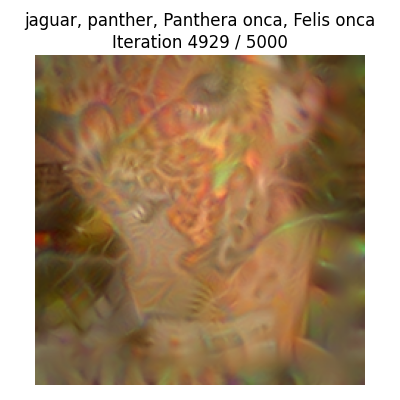

This produces model forces very abstract class visualizations that produce marquee features in the visualization, like lots of jaguar eyes and spots, without much consideration for overall realness. If we use a different model, like inception_v3or a model like mobilenet, will we get a different visualization?

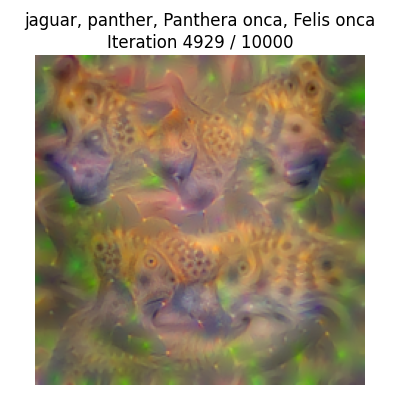

Let's start with inception_v3. This network touts higher accuracy than Squeezenet, but at the cost of more parameters.

Empirically, this model values structure far more than Squeezenet! Compared to Squeezenet, far more of the base image is used. You can clearly see an outline of my face and eyes sort of preserved. This differs greatly from the Squeezenet version.

Fun! What about Mobilenet, which