Class Visualization with Deep Learning

Convolutional neural networks learn through a process called backpropagation with gradient descent. In brief, the algorithm works as follows:

- You give the model an image.

- The model spits out a predicted class/label for the image, like "Cat" or "Dog".

- The predicted label "Cat" is compared to the real label "Dog". If the model is wrong, we scold the model and force it to change its parameters so that it makes a better prediction on this exact image next time. This is the learning aspect of deep learning!

- Run this process over and over with many different images and their labels. The end result is a model that can classify images!
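The loop above can be sketched in a few lines. This is only a minimal illustration under loud assumptions: instead of a convolutional network it uses a tiny linear softmax classifier written in NumPy, the two four-pixel "images" are made up, and names like `W`, `b`, and `learning_rate` are hypothetical choices for this example.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    exps = np.exp(logits - logits.max())
    return exps / exps.sum()

# Assumed toy data: class 0 ("Cat") is bright on the left pixels,
# class 1 ("Dog") is bright on the right pixels.
images = np.array([[1.0, 0.9, 0.1, 0.0],
                   [0.0, 0.1, 0.9, 1.0]])
labels = np.array([0, 1])

num_classes, num_pixels = 2, 4
W = np.zeros((num_classes, num_pixels))  # model parameters (weights)
b = np.zeros(num_classes)                # model parameters (biases)
learning_rate = 0.5

for epoch in range(200):                 # run the process over and over
    for x, y in zip(images, labels):
        probs = softmax(W @ x + b)       # the model's prediction
        target = np.eye(num_classes)[y]  # the real label, one-hot encoded
        error = probs - target           # cross-entropy gradient w.r.t. the logits
        # Gradient descent: nudge the parameters to do better on this image.
        W -= learning_rate * np.outer(error, x)
        b -= learning_rate * error

predictions = [int(np.argmax(W @ x + b)) for x in images]
```

In a real network the `error` term is pushed back through many layers by backpropagation, but the idea is the same: the parameters move, the image stays fixed.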

Once we have trained the model and it can correctly classify images into a predetermined set of classes, we can extend this update beyond the model's parameters. In fact, we can propagate the update all the way back through the network and modify the input image itself.

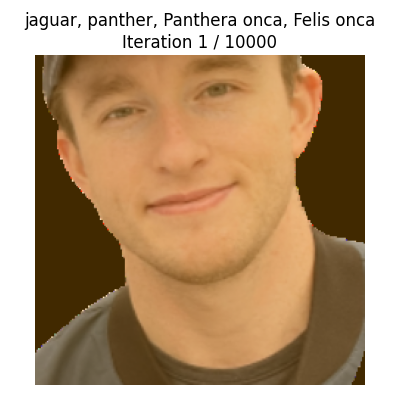

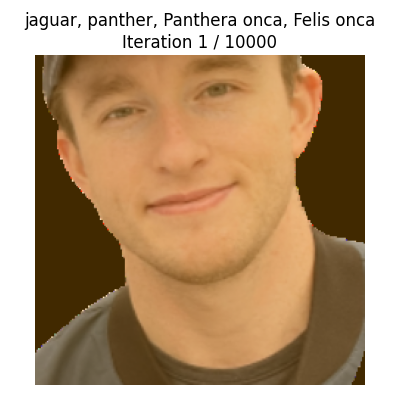

Let's think about what happens if we give our trained model this image with the label "Jaguar" and perform the following process:

- Freeze the model parameters so the model cannot be updated by our shenanigans

- Run the image through the model and predict a label like "Human"

- Lie to the model and say this should have been classified as a "Jaguar"

- Backpropagate this correction (this image is a Jaguar!!!) through the model

- Take the output of this backpropagation at the input layer, which has the same shape as the input image, and call it the image delta

- Add this delta to the original image

- Repeat, sending the modified image to the model and continuously updating it
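The steps above can be sketched as follows. This is a rough illustration, not the code behind the images in this post: it assumes a linear softmax classifier in NumPy with hand-picked, hypothetical weights `W` standing in for a trained model, a made-up four-pixel "image", and an assumed `step_size`.

```python
import numpy as np

def softmax(logits):
    exps = np.exp(logits - logits.max())
    return exps / exps.sum()

class_names = ["Human", "Jaguar", "Dog"]

# Hypothetical "trained" weights: one row per class, one column per pixel.
# They are frozen: nothing below ever modifies W.
W = np.array([[ 1.0,  0.5, -0.5, -1.0],
              [-1.0,  1.0,  1.0, -1.0],
              [ 0.5, -1.0, -0.5,  1.0]])

image = np.array([0.9, 0.2, 0.1, 0.3])  # made-up input the model calls "Human"
target = np.array([0.0, 1.0, 0.0])      # the lie: "this is a Jaguar"
step_size = 0.1                         # assumed value for this sketch

start_label = class_names[int(np.argmax(W @ image))]

for _ in range(100):
    probs = softmax(W @ image)               # run the image through the model
    # Backpropagate the "correction" to the input layer. For this linear
    # model the cross-entropy gradient w.r.t. the image is W^T (probs - target).
    grad_wrt_image = W.T @ (probs - target)
    delta = -step_size * grad_wrt_image      # the image delta, same shape as the image
    image = image + delta                    # add the delta to the image and repeat

final_probs = softmax(W @ image)
final_label = class_names[int(np.argmax(final_probs))]
final_confidence = float(final_probs[1])     # probability assigned to "Jaguar"
```

With a deep network the gradient at the input layer comes from an autodiff framework rather than a closed-form expression, and pixel values would typically be kept in a valid range, but the loop has the same shape.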

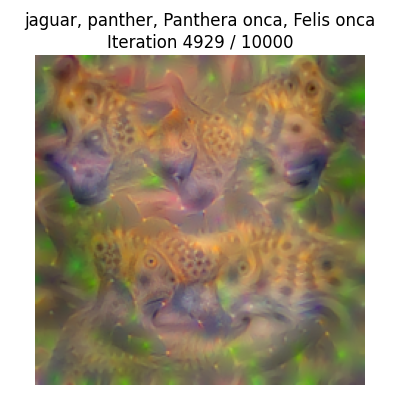

The result is a transformation of the original image into something that the model will say is a Jaguar with very high confidence.

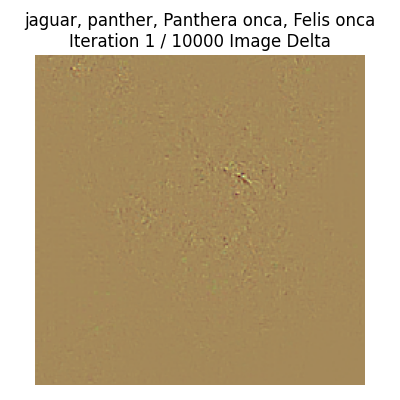

So what does this little delta we apply to the image each iteration look like?

Not much, really.

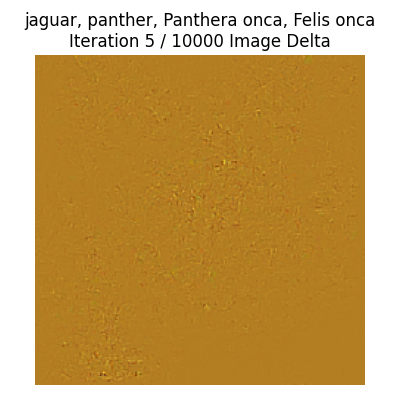

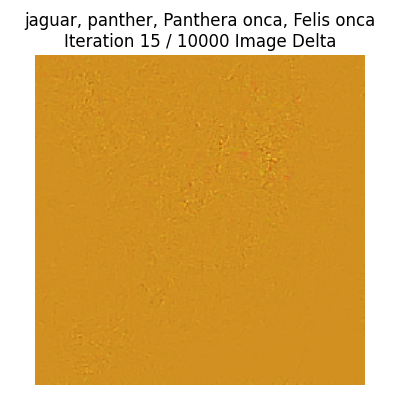

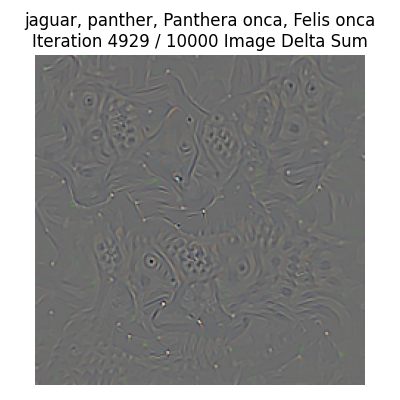

But if we add each iteration's delta to the ones before it, summing the deltas up and looking at them in aggregate...

We start to see how our model is creating a mask over our image that does not look like a jaguar but has a lot of jaguar features! Applying this mask to the original image at each iteration yields the final transformed image.
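To make the summing concrete, here is a small sketch that keeps a running total of the deltas. It rests on the same assumptions as any toy version of this process: a linear softmax classifier in NumPy, hypothetical hand-picked weights `W`, and a made-up four-pixel image. The names `total_delta` and `mask_scores` are invented for this example.

```python
import numpy as np

def softmax(logits):
    exps = np.exp(logits - logits.max())
    return exps / exps.sum()

class_names = ["Human", "Jaguar", "Dog"]

# Hypothetical frozen weights: one row per class, one column per pixel.
W = np.array([[ 1.0,  0.5, -0.5, -1.0],
              [-1.0,  1.0,  1.0, -1.0],
              [ 0.5, -1.0, -0.5,  1.0]])

original = np.array([0.9, 0.2, 0.1, 0.3])  # made-up starting image
target = np.array([0.0, 1.0, 0.0])         # one-hot "Jaguar"
step_size = 0.1                            # assumed value

image = original.copy()
total_delta = np.zeros_like(original)      # running sum of every delta (the "mask")

for _ in range(100):
    probs = softmax(W @ image)
    delta = -step_size * (W.T @ (probs - target))
    total_delta += delta                   # aggregate the small per-step deltas
    image = image + delta

# How strongly does the mask alone excite each class?
mask_scores = W @ total_delta
mask_label = class_names[int(np.argmax(mask_scores))]
```

Each individual `delta` is tiny, but `original + total_delta` reproduces the final image, and in this toy setup the mask on its own scores highest for the "Jaguar" class.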

And that is how we can get a neural network to visualize a class!

Lessons Learned

There's a lot that can be learned just by seeing what the neural network will accept as a jaguar. Clearly, realism is not a concern, but the hallmark features, like eyes, a face-like shape, and jaguar print, are critical.

More

Read Part 2 to see how the starting image and model influence the results.