Class Visualization Part 2 - Starting Image

Read Part 1: Class Visualization with Deep Learning to learn how we can get a convolutional neural network to visualize a class.

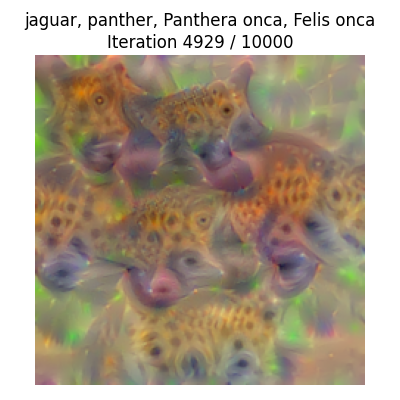

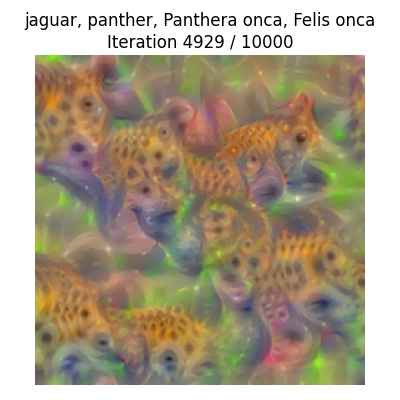

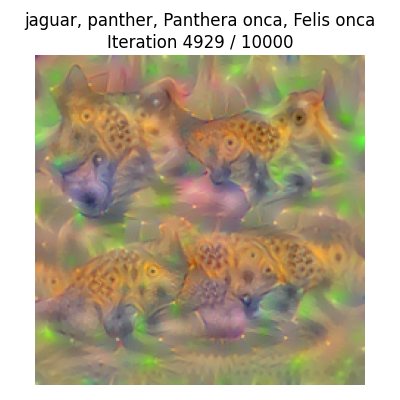

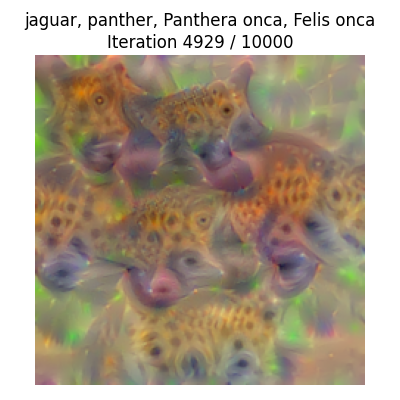

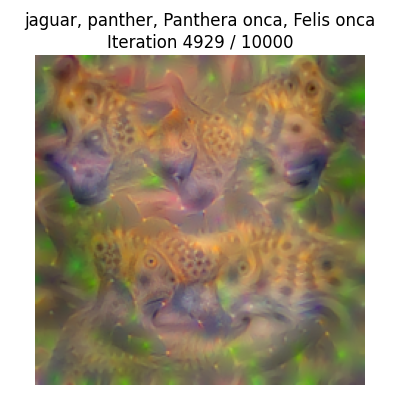

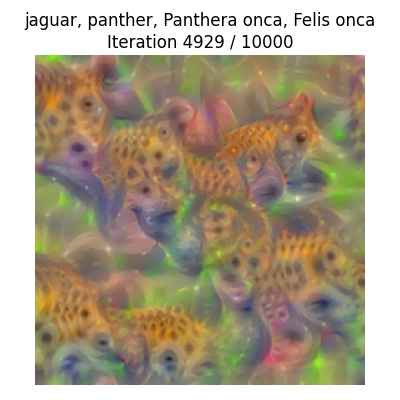

As we saw in Part 1, we can visualize a class starting from any base image, like so:

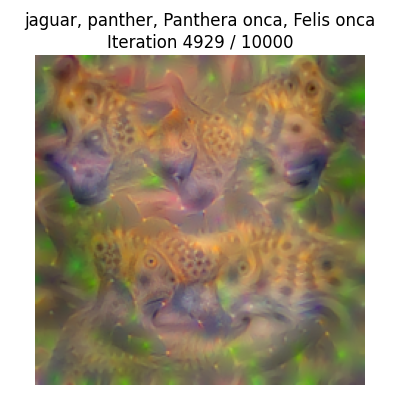

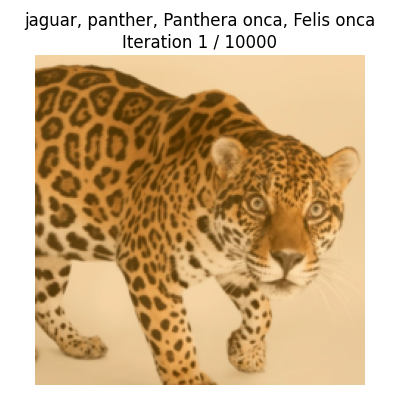

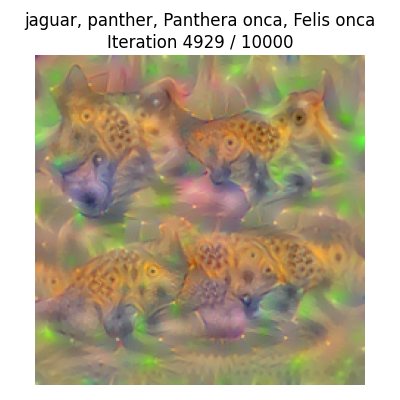

The visualization runs with whatever foothold it can find in the base image. For example, my eyes quickly turn into jaguar spots, and the spots spread from there, with each iteration building on the last. Things change if we start from a different image, such as a jaguar. Here, the jaguar's face and body shape are preserved for a while, but its spots begin to morph into eyes, which then turn into faces. This is very instructive about what the model prioritizes when classifying a jaguar: faces are king.
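Class visualization is commonly done by gradient ascent on the input: starting from a base image, repeatedly nudge the pixels in the direction that raises the target class score, usually with a penalty that keeps pixel values from blowing up. Part 1 covers the actual setup; what follows is only a minimal sketch of the general idea under loud assumptions. A hypothetical linear scorer (`weights`, `class_score`, `score_grad`) stands in for the CNN, and `visualize_class` with its `steps`, `lr` and `l2` parameters is made up for this illustration.

```python
import numpy as np

# Hypothetical stand-in for a CNN: a linear scorer s_c(x) = w_c . x over a
# flattened 8x8 grayscale "image". A real network would supply the gradient
# through backpropagation instead of the closed form used here.
rng = np.random.default_rng(0)
NUM_CLASSES, H, W = 3, 8, 8
weights = rng.normal(size=(NUM_CLASSES, H * W))

def class_score(x, c):
    """Score of class c for an image x of shape (H, W)."""
    return float(weights[c] @ x.ravel())

def score_grad(x, c):
    """Gradient of the class score w.r.t. the pixels (constant for a linear model)."""
    return weights[c].reshape(H, W)

def visualize_class(base_image, c, steps=200, lr=0.1, l2=0.05):
    """Gradient ascent on s_c(x) - l2 * ||x||^2, starting from base_image."""
    x = base_image.astype(float)  # astype returns a copy, so the base is untouched
    for _ in range(steps):
        # Each step updates the previous iterate rather than starting over.
        x += lr * (score_grad(x, c) - 2 * l2 * x)
    return x

# Usage: random numbers stand in for a real base photo.
base = rng.uniform(size=(H, W))
result = visualize_class(base, c=1)
print("score before:", class_score(base, 1))
print("score after: ", class_score(result, 1))
```

In a real setup, `score_grad` would come from the framework's automatic differentiation, and the base image would be a preprocessed photo rather than random numbers.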

Now, what does an unbiased visualization look like? If we start with random noise or a solid color, should we expect the model to produce a final output that looks similar to the biased examples we've seen?

They all definitely look similar! Quick, without looking back, can you label each image with its base image? The options are Sam, jaguar, random, and uniform.

Chances are you can't: the model wins out in the end!
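Why would different starting images end up in roughly the same place? A toy sketch, under a strong simplifying assumption: if the "network" is a hypothetical linear scorer `w_c` and we maximize the regularized objective s_c(x) - l2 * ||x||^2, that objective is concave with a single maximizer, `w_c / (2 * l2)`, so gradient ascent from a photo stand-in, random noise, or a solid gray image converges to the same output. The names `ascend`, `starts`, `LR` and `L2` are made up for this example.

```python
import numpy as np

# Toy, hypothetical setup: a linear "network" replaces the CNN, so the
# regularized objective s_c(x) - L2 * ||x||^2 has exactly one maximum.
rng = np.random.default_rng(42)
H, W = 8, 8
LR, L2, STEPS = 0.2, 0.5, 300
w_c = rng.normal(size=(H, W))  # pixel weights for the target class c

def ascend(x):
    """Gradient ascent on sum(w_c * x) - L2 * ||x||^2 from starting image x."""
    x = x.astype(float)  # work on a copy of the starting image
    for _ in range(STEPS):
        x += LR * (w_c - 2 * L2 * x)
    return x

starts = {
    "photo": np.linspace(0.0, 1.0, H * W).reshape(H, W),  # stand-in for a real photo
    "random": rng.uniform(0.0, 1.0, size=(H, W)),          # random noise
    "uniform": np.full((H, W), 0.5),                       # solid gray
}
results = {name: ascend(img) for name, img in starts.items()}

def max_pairwise_gap(images):
    """Largest pixel difference between any two images in the list."""
    return max(np.abs(a - b).max() for a in images for b in images)

print("gap before:", max_pairwise_gap(list(starts.values())))
print("gap after: ", max_pairwise_gap(list(results.values())))
```

A real CNN's objective is not concave, so the starting image does shape the path (the jaguar's face survives for a while) and the endpoints need not be identical; this toy only illustrates why the final images can end up looking alike.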

In Part 3, we'll generate some of these images using a different model and see if that makes a difference in class visualization.